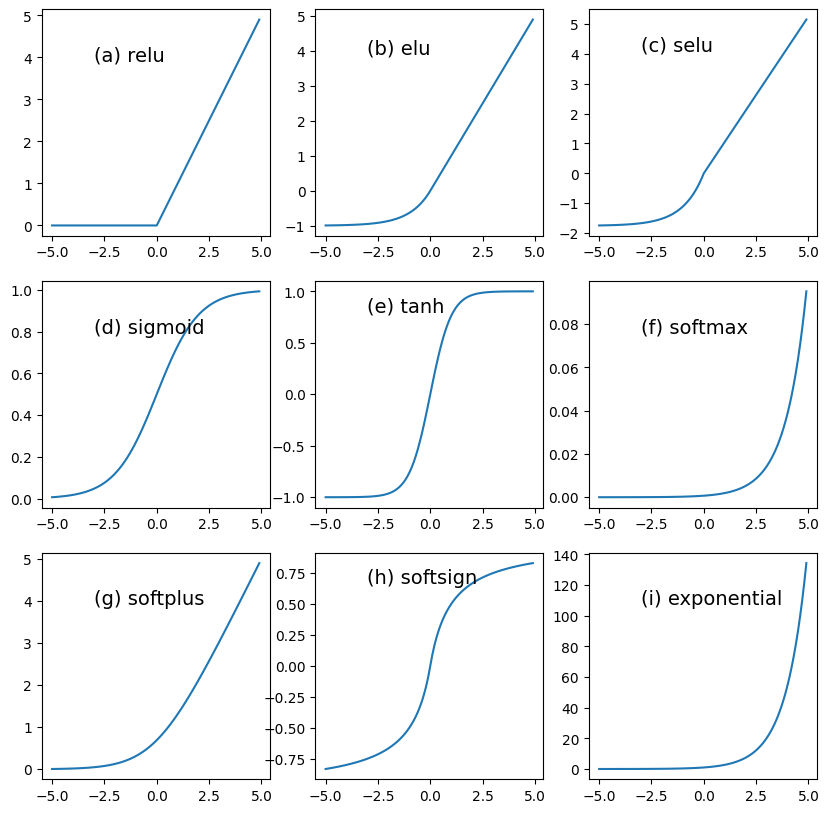

This example Python program defines nine popular activation functions (ReLU, ELU, SELU, sigmoid, tanh, softmax, softplus, softsign and exponential) and plots each of them in a 3x3 grid.
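For reference, the standard definitions of the nine plotted functions are listed below.

- The SELU constants $\alpha \approx 1.67326324$ and $\lambda \approx 1.05070098$ are the values Keras uses, with $\lambda$ acting as a scale factor.
- The SELU $\alpha$ is a fixed constant. It is not the same parameter as the ELU $\alpha$, which the script sets to 1.

$$
\begin{aligned}
\mathrm{relu}(x) &= \max(0, x) \\
\mathrm{elu}(x) &= \begin{cases} x & x \ge 0 \\ \alpha\,(e^{x}-1) & x < 0 \end{cases} \\
\mathrm{selu}(x) &= \lambda \begin{cases} x & x \ge 0 \\ \alpha\,(e^{x}-1) & x < 0 \end{cases} \\
\sigma(x) &= \frac{1}{1+e^{-x}} \\
\tanh(x) &= \frac{e^{x}-e^{-x}}{e^{x}+e^{-x}} \\
\mathrm{softmax}(x)_i &= \frac{e^{x_i}}{\sum_j e^{x_j}} \\
\mathrm{softplus}(x) &= \ln(1+e^{x}) \\
\mathrm{softsign}(x) &= \frac{x}{1+|x|} \\
\mathrm{exponential}(x) &= e^{x}
\end{aligned}
$$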

Code:

```python
import numpy as np
import matplotlib.pyplot as plt

#-------------------------------
# define the activation functions

def relu(x):
    # max(0, x): keep non-negative entries, set negative entries to 0
    v = x.copy()
    v[x < 0] = 0
    return v

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def softmax(x, values):
    # exp(x) divided by the sum of exp over all entries of `values`
    exponent_sum = np.sum(np.exp(values))
    return np.exp(x) / exponent_sum

def tanh(x):
    # explicit formula; np.tanh(x) gives the same values
    return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))

def softplus(x):
    return np.log(np.exp(x) + 1)

def elu(x, alpha):
    # x for x >= 0, alpha * (exp(x) - 1) for x < 0
    v = x.copy()
    v[x < 0] = alpha * (np.exp(x[x < 0]) - 1)
    return v

def selu(x, alpha=1.67326324, scale=1.05070098):
    # scaled ELU; the default alpha and scale are the constants Keras uses
    v = scale * x
    v[x < 0] = scale * alpha * (np.exp(x[x < 0]) - 1)
    return v

def leakyRelu(x, alpha):
    # x for x >= 0, alpha * x for x < 0 (defined here but not plotted)
    v = x.copy()
    v[x < 0] = alpha * x[x < 0]
    return v

def softsign(x):
    return x / (np.abs(x) + 1)

def exponential(x):
    return np.exp(x)

#-----------------------------
# prepare data for displaying the functions

x = np.arange(-5, 5, 0.1)   # 100 points from -5 up to (not including) 5
y = np.empty((9, len(x)))
y[0, :] = relu(x)
y[1, :] = elu(x, 1.0)
y[2, :] = selu(x)
y[3, :] = sigmoid(x)
y[4, :] = tanh(x)
y[5, :] = softmax(x, x)
y[6, :] = softplus(x)
y[7, :] = softsign(x)
y[8, :] = exponential(x)

titletext = ['relu', 'elu', 'selu', 'sigmoid', 'tanh', 'softmax',
             'softplus', 'softsign', 'exponential']

#--------------------------------------------------------------------
# display the functions in a 3x3 grid of subplots

fig = plt.figure(figsize=(10, 10))
abc = 'abcdefghijklmnopqrstuvwxyz'   # panel labels (a), (b), ...
for i in range(9):
    plt.subplot(3, 3, i + 1)
    plt.plot(x, y[i, :])
    plt.text(-3, max(y[i, :]) * 0.8, '(' + abc[i] + ') ' + titletext[i], fontsize=14)

# write the image file first, then open the interactive window
fig.savefig('activation_functions_keras.png', dpi=150)
plt.show()
```
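The functions above exponentiate their inputs directly. This is fine on the plotted range [-5, 5), but in float64 `np.exp` overflows to `inf` once its argument exceeds roughly 709. As a result:

- `softmax` and the explicit `tanh` formula return `nan` (inf/inf).
- `softplus` returns `inf` instead of roughly x.

The block below is a minimal sketch of overflow-safe variants using common rewrites:

- max-subtraction for softmax
- `np.logaddexp` for softplus
- the identity sigmoid(x) = (1 + tanh(x/2)) / 2

The names `stable_softmax`, `stable_softplus` and `stable_sigmoid` are hypothetical and not part of the original program. For tanh itself, `np.tanh` can simply replace the explicit formula.

```python
import numpy as np

def stable_softmax(values):
    # Subtracting the maximum does not change the result (the factor
    # exp(-max) cancels between numerator and denominator), but it keeps
    # every exponent <= 0, so np.exp cannot overflow.
    shifted = values - np.max(values)
    e = np.exp(shifted)
    return e / np.sum(e)

def stable_softplus(x):
    # log(exp(0) + exp(x)) evaluated without forming exp(x) explicitly
    return np.logaddexp(0.0, x)

def stable_sigmoid(x):
    # 1 / (1 + exp(-x)) rewritten as 0.5 * (1 + tanh(x / 2));
    # np.tanh saturates at +/-1 instead of overflowing
    return 0.5 * (1.0 + np.tanh(0.5 * x))

if __name__ == "__main__":
    big = np.array([1000.0, 1001.0, 1002.0])
    print(stable_softmax(big))    # same values as the softmax of [0, 1, 2]
    print(stable_softplus(big))   # approximately equal to big itself
    print(stable_sigmoid(np.array([-1000.0, 0.0, 1000.0])))
```

On the plotted range these variants agree with the original definitions. They differ only for large-magnitude inputs.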