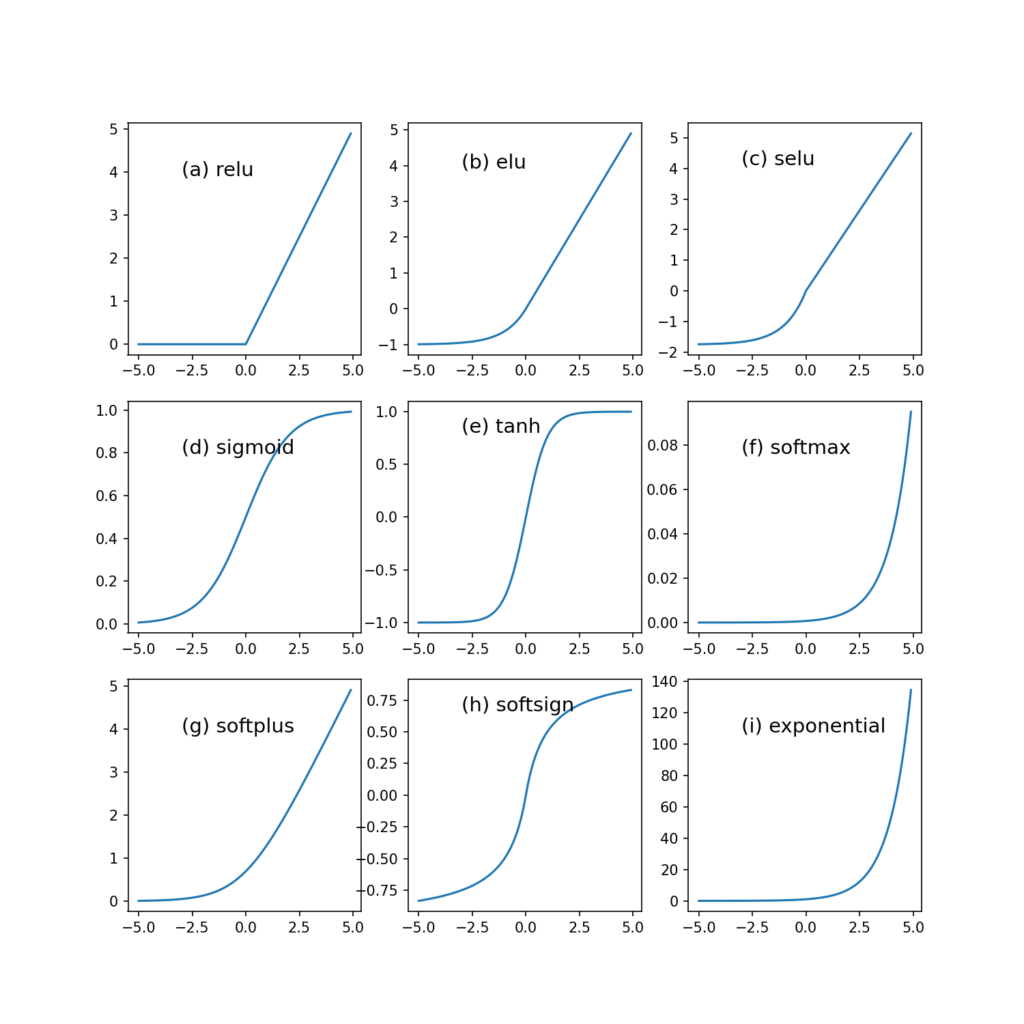

Activation functions are a key component of neural networks in machine learning and deep learning. They fall into three broad types: binary step functions, linear activation functions, and non-linear activation functions. The activation functions available in Keras include relu, sigmoid, softmax, softplus, softsign, tanh, selu, elu, and exponential. In addition, users can define custom activation functions. Each built-in function is explained below.
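To make the three types concrete, here is a minimal NumPy sketch. The helper names `binary_step`, `linear`, and `nonlinear_example`, and the `threshold` and `slope` parameters, are illustrative assumptions and not part of Keras.

```python
import numpy as np

def binary_step(x, threshold=0.0):
    """Hypothetical helper: 1 where x >= threshold, otherwise 0."""
    return np.where(x >= threshold, 1.0, 0.0)

def linear(x, slope=1.0):
    """Hypothetical helper: output is proportional to the input."""
    return slope * x

def nonlinear_example(x):
    """tanh, used here as one representative non-linear activation."""
    return np.tanh(x)

x = np.array([-2.0, 0.0, 2.0])
step_out = binary_step(x)           # jumps from 0 to 1 at the threshold
linear_out = linear(x, slope=0.5)   # a straight line through the origin
tanh_out = nonlinear_example(x)     # a smooth curve bounded by -1 and 1
```

A stack of layers that uses only linear activations collapses to a single linear map, which is why the non-linear functions are the ones used in practice.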

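    import numpy as np
    import matplotlib.pyplot as plt

    def relu(x):
        # Zero out negative inputs and keep the rest unchanged
        v = x.copy()
        v[x < 0] = 0
        return v

    def sigmoid(x):
        return 1 / (1 + np.exp(-x))

    def softmax(x, values):
        # Normalise exp(x) by the sum of exponentials over all values
        exponent_sum = np.sum(np.exp(values))
        return np.exp(x) / exponent_sum

    def tanh(x):
        return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))

    def softplus(x):
        return np.log(np.exp(x) + 1)

    def elu(x, alpha):
        v = x.copy()
        v[x >= 0] = x[x >= 0]
        v[x < 0] = alpha * (np.exp(x[x < 0]) - 1)
        return v

    def leakyRelu(x, alpha):
        # Equivalent to max(x, alpha * x) for 0 < alpha < 1
        v = x.copy()
        v[x >= alpha * x] = x[x >= alpha * x]
        v[x < alpha * x] = alpha * x[x < alpha * x]
        return v

    def selu(x):
        # Constants as listed for tf.keras.activations.selu
        alpha = 1.67326324
        scale = 1.05070098
        v = x.copy()
        v[x >= 0] = scale * x[x >= 0]
        v[x < 0] = scale * alpha * (np.exp(x[x < 0]) - 1)
        return v

    def softsign(x):
        return x / (np.abs(x) + 1)

    def exponential(x):
        return np.exp(x)

    # Evaluate every function on the same grid, one row per function
    x = np.arange(-5, 5, 0.1)
    y = np.empty((9, x.size))
    y[0, :] = relu(x)
    y[1, :] = elu(x, 1.0)
    y[2, :] = selu(x)
    y[3, :] = sigmoid(x)
    y[4, :] = tanh(x)
    y[5, :] = softmax(x, x)
    y[6, :] = softplus(x)
    y[7, :] = softsign(x)
    y[8, :] = exponential(x)

    titletext = ['relu', 'elu', 'selu', 'sigmoid', 'tanh', 'softmax', 'softplus', 'softsign', 'exponential']
    abc = 'abcdefghijklmnopqrstuvwxyz'

    # Plot the nine functions on a 3x3 grid, labelling each panel (a) to (i)
    fig = plt.figure(figsize=(20, 15))
    for i in range(9):
        plt.subplot(3, 3, i + 1)
        plt.plot(x, y[i, :])
        plt.text(-3, max(y[i, :]) * 0.8, '(' + abc[i] + ') ' + titletext[i], fontsize=14)
    plt.show()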


tf.keras.activations.relu(x, alpha=0.0, max_value=None, threshold=0.0)

With the default arguments, f(x) = max(x, 0)

ReLU is the most common default choice of activation function for hidden layers in deep neural networks.
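The extra arguments in the signature change the shape of the function. The following NumPy sketch mirrors them, assuming the piecewise behaviour described in the TensorFlow 2.x documentation: `max_value` caps the output, inputs below `threshold` are treated as the negative part, and `alpha` is the slope applied there. The name `relu_np` is hypothetical, and this is not the Keras implementation.

```python
import numpy as np

def relu_np(x, alpha=0.0, max_value=None, threshold=0.0):
    """Sketch of a parameterised ReLU (assumed semantics, not Keras code)."""
    x = np.asarray(x, dtype=float)
    # Pass values at or above the threshold through; scale the rest by alpha
    out = np.where(x >= threshold, x, alpha * (x - threshold))
    # Optionally saturate the output at max_value
    if max_value is not None:
        out = np.minimum(out, max_value)
    return out

x = np.array([-3.0, -1.0, 0.0, 2.0, 8.0])
plain = relu_np(x)                   # standard ReLU
leaky = relu_np(x, alpha=0.1)        # small slope for negative inputs
capped = relu_np(x, max_value=6.0)   # output saturates at 6
shifted = relu_np(x, threshold=3.0)  # inputs below 3 are zeroed
```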

tf.keras.activations.sigmoid(x)

f(x) = 1 / (1 + exp(-x))

Sigmoid maps any real input to a value between 0 and 1, so it is typically used in the output layer when the model predicts a probability, for example in binary classification.
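As an illustration of sigmoid in an output layer, the sketch below turns raw scores into probabilities and then into 0/1 labels. It evaluates the formula in a numerically careful way so that large negative inputs do not overflow in `exp`. The name `stable_sigmoid` and the 0.5 decision threshold are assumptions made for this example.

```python
import numpy as np

def stable_sigmoid(x):
    """Sigmoid evaluated without overflow (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    out = np.empty_like(x)
    pos = x >= 0
    # For x >= 0, exp(-x) cannot overflow
    out[pos] = 1.0 / (1.0 + np.exp(-x[pos]))
    # For x < 0, use the equivalent form exp(x) / (1 + exp(x))
    exp_x = np.exp(x[~pos])
    out[~pos] = exp_x / (1.0 + exp_x)
    return out

scores = np.array([-1000.0, -2.0, 0.0, 2.0, 1000.0])
probs = stable_sigmoid(scores)        # values between 0 and 1
labels = (probs >= 0.5).astype(int)   # assumed decision threshold of 0.5
```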

tf.keras.activations.softmax(x, axis=-1)

f(x_i) = exp(x_i) / sum(exp(x_k), k = 1..N)

The softmax function returns a probability for each class: the outputs are non-negative and sum to 1. It is most commonly used as the activation function of the last layer of a neural network for multi-class classification.
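The sketch below applies the softmax formula along an axis, in the spirit of the `axis=-1` argument, and picks the most probable class per row. Subtracting the row maximum before exponentiating is a common stability trick that leaves the result unchanged. The name `softmax_np` and the sample scores are assumptions for illustration only.

```python
import numpy as np

def softmax_np(logits, axis=-1):
    """Softmax along one axis (hypothetical helper, not the Keras code)."""
    logits = np.asarray(logits, dtype=float)
    # Shifting by the maximum avoids overflow and does not change the ratios
    shifted = logits - np.max(logits, axis=axis, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=axis, keepdims=True)

# Two samples with three class scores each
logits = np.array([[1.0, 2.0, 3.0],
                   [1000.0, 1000.0, 1000.0]])
probs = softmax_np(logits)                   # each row sums to 1
predicted_class = np.argmax(probs, axis=-1)  # index of the largest probability
```

The second row shows why the shift matters: evaluating exp(1000) directly would overflow, while the shifted version gives three equal probabilities.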

tf.keras.activations.softplus(x)

f(x) = log(exp(x) + 1)
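Evaluating this formula literally overflows for large x, because exp(x) grows very quickly. A minimal NumPy sketch of the same function using `np.logaddexp`, which computes log(exp(a) + exp(b)) in a stable way, is shown below. The name `softplus_np` is hypothetical.

```python
import numpy as np

def softplus_np(x):
    """Softplus as log(exp(0) + exp(x)), which equals log(1 + exp(x))."""
    return np.logaddexp(0.0, np.asarray(x, dtype=float))

x = np.array([-1000.0, -1.0, 0.0, 1.0, 1000.0])
smooth = softplus_np(x)

# For moderate inputs the stable form matches the literal formula
moderate = np.array([-1.0, 0.0, 1.0])
literal = np.log(np.exp(moderate) + 1)
```

Softplus behaves like a smooth version of ReLU: it is close to 0 for large negative inputs and close to x for large positive inputs.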

tf.keras.activations.softsign(x)

f(x) = x / (abs(x) + 1)

tf.keras.activations.tanh(x)

f(x) = (exp(x) - exp(-x)) / (exp(x) + exp(-x))
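tanh is closely related to sigmoid: dividing the numerator and denominator of the tanh formula by exp(x) gives tanh(x) = 2 * sigmoid(2x) - 1, so tanh is a rescaled sigmoid with outputs between -1 and 1. The short check below confirms the identity numerically; the variable names are illustrative.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

x = np.linspace(-5.0, 5.0, 11)
tanh_direct = np.tanh(x)
tanh_via_sigmoid = 2.0 * sigmoid(2.0 * x) - 1.0

# Largest absolute difference between the two ways of computing tanh
max_gap = np.max(np.abs(tanh_direct - tanh_via_sigmoid))
```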

tf.keras.activations.selu(x)

f(x) = scale * x if x >= 0; f(x) = scale * alpha * (exp(x) - 1) if x < 0, where alpha = 1.67326324 and scale = 1.05070098

tf.keras.activations.elu(x, alpha=1.0)

f(x) = x if x >= 0; f(x) = alpha * (exp(x) - 1) if x < 0

tf.keras.activations.exponential(x)

f(x) = exp(x)